As we’ve shared previously, networks are the circulatory and nervous systems of your enterprise. Indeed, “the network is the computer,” and networks are an invaluable source of ground truth. From a communications perspective, you can learn a lot by directly observing who is speaking, to whom they are speaking, the language they are using, the tone of voice, and the content of the communications. For network communications and metadata, the analogies are straightforward: DNS traffic shows how domain names map to IP addresses and helps identify underlying services and connected devices; TLS provides detailed insight into encryption capabilities and device characteristics; GTP provides geolocation-specific information; and MODBUS reveals the communications within industrial control systems.

Should legacy network telemetry be considered network metadata?

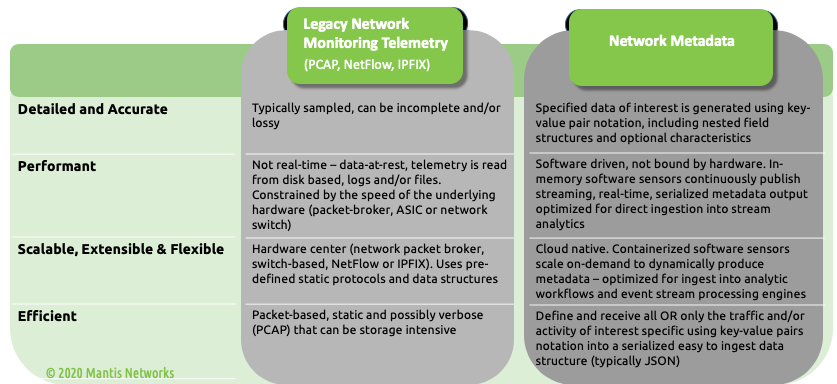

Generally speaking, metadata is “data about data.” However, not all data is metadata, and not all metadata is equally useful or valuable. In an effort to capitalize on the latest AI, ML and “data science” buzzwords, many legacy network monitoring vendors misuse the term metadata to describe legacy network telemetry, hoping to offer some added value to security and data science teams. Specifically, they extend the term to include static packet capture (PCAP), logs, SNMP, NetFlow and IPFIX data. These legacy forms of network telemetry are interesting and useful, and they were once cutting-edge: at the time, they provided the most accurate and revealing insights into network traffic. Today, however, they provide limited context about modern attacks, and they are NOT network metadata in the contemporary sense.

NetFlow, IPFIX, PCAP and SNMP are all primary forms of data and are not metadata by definition. Sure, SNMP data can contain metadata, but it is limited and requires polling. Calling these things metadata is disingenuous at best. Nor are these legacy data types efficient or optimized for today’s network architectures, storage and indexing systems, and analytics. They are merely a sampled or filtered form of the actual network activity or, in the case of SNMP, PCAP and logs, a replication of the traffic of interest, an accounting of the traffic, and/or a description of the state of equipment connected to the network, delivered after the fact as a filtered or sampled static representation.

What is network metadata?

Conversely, network metadata in the contemporary sense is far more dynamic, powerful and useful. It provides much-needed context about network communications, including nested value structures and optional fields, which makes it richer than legacy network telemetry and a valuable resource for network detection and response. Some of the points we want to emphasize are:

- Network metadata is serialized, at wire speed, in formats that can be natively ingested by existing data science tools

- NetFlow and IPFIX, when they are used for something other than “flow” stats, may contain valuable metadata, depending entirely on the intelligence coded into the application that produces them. Even then, the format requires a “collector” to capture and parse the underlying metadata, so it is NOT natively ingestible by data science tools and NOT real-time.

- PCAP data is raw packets, not metadata.

- SNMP metadata may indicate something about the types of devices communicating on the network, but it is limited to devices that use SNMP, a legacy protocol by any standard.

How is network metadata used?

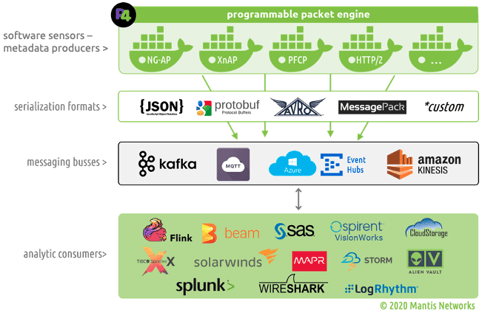

Additionally, new forms of network metadata consist of highly efficient, high-resolution and reliable data structures produced by software sensors that don’t require polling. They allow the programmer or user to target and extract specific traffic of interest using key-value pair notation, and to produce streaming formats (typically JSON) delivered via scalable, fault-tolerant, publish-subscribe messaging systems such as Kafka.

Rather than being just a static snapshot of network traffic or the state of network equipment, advanced network metadata is dynamic, structured as key-value pairs, and used in combination with data science tools and techniques. Together they transform unstructured network traffic into more efficient and detailed forms of intelligence that can be extracted, modeled, analyzed and characterized by relevant criteria, resources, behaviors, patterns, and associations. We previously wrote about real-time DNS observability and managing DNS workflows leveraging DNS metadata to highlight these points.
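To make the key-value idea concrete, here is a minimal Python sketch of selecting traffic of interest from a stream of JSON metadata records. The record layout, the field names (`proto`, `src`, `dst`, `dns.query`, `dns.answers`, `tls.sni`) and the helper functions `get_path` and `select` are all assumptions made for illustration, not any particular sensor's schema. The stream is a plain list here; in a real deployment the lines would arrive from a publish-subscribe consumer.

```python
import json

# Hypothetical newline-delimited JSON records; all field names are illustrative.
RAW_STREAM = [
    '{"ts": 1700000000.1, "proto": "dns", "src": {"ip": "198.51.100.5", "port": 53211}, '
    '"dst": {"ip": "198.51.100.1", "port": 53}, '
    '"dns": {"query": "example.com", "qtype": "A", "answers": ["192.0.2.34"]}}',
    '{"ts": 1700000000.4, "proto": "tls", "src": {"ip": "198.51.100.7", "port": 40022}, '
    '"dst": {"ip": "192.0.2.10", "port": 443}, '
    '"tls": {"version": "1.3", "sni": "example.org"}}',
    '{"ts": 1700000000.9, "proto": "dns", "src": {"ip": "198.51.100.5", "port": 53212}, '
    '"dst": {"ip": "198.51.100.1", "port": 53}, '
    '"dns": {"query": "example.net", "qtype": "AAAA"}}',
]


def get_path(record, dotted_key, default=None):
    """Look up a nested value with dotted key notation, e.g. 'dns.query'."""
    value = record
    for part in dotted_key.split("."):
        if not isinstance(value, dict) or part not in value:
            return default
        value = value[part]
    return value


def select(stream, criteria):
    """Yield parsed records whose (possibly nested) keys equal the wanted values."""
    for line in stream:
        record = json.loads(line)
        if all(get_path(record, key) == want for key, want in criteria.items()):
            yield record


dns_records = list(select(RAW_STREAM, {"proto": "dns", "dst.port": 53}))
for rec in dns_records:
    # "answers" is optional, so supply a default instead of assuming it exists.
    print(rec["src"]["ip"], get_path(rec, "dns.query"), get_path(rec, "dns.answers", []))
```

Because each record is self-describing, the same selection logic works for nested values and tolerates fields that are absent from some records.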

Because network metadata provides additional context around network communications and can be structured for efficient ingest into follow-on analytic systems and processes, it is critically useful in cybersecurity and network monitoring. Metadata can be used to help investigate indicators of compromise and unauthorized or rogue connections, and it provides a reliable and accurate streaming data source for AI/ML models that help with anomaly detection and response. As network traffic volumes and speeds increase, metadata-based detection can help process the additional data and reduce the time it takes to identify potentially malicious activity or an underperforming resource. If need be, the network data can be piped to colder storage for future use, saving on storage and compute costs.
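As a rough illustration of those two uses, the Python sketch below checks DNS metadata records against a list of indicators of compromise and flags sources whose query volume is a statistical outlier. The flat record layout (`src_ip`, `query`), the sample data, the function names and the z-score threshold are all assumptions chosen for the example; a production model would be considerably more involved.

```python
from collections import Counter
from statistics import mean, pstdev


def ioc_hits(records, bad_domains):
    """Return records whose queried name equals, or is a subdomain of, a listed domain."""
    return [
        r for r in records
        if any(r["query"] == d or r["query"].endswith("." + d) for d in bad_domains)
    ]


def noisy_sources(records, threshold=1.5):
    """Return source IPs whose query count has a z-score above the threshold."""
    counts = Counter(r["src_ip"] for r in records)
    values = list(counts.values())
    if len(values) < 2:
        return []
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []  # every source behaves identically, nothing stands out
    return sorted(ip for ip, n in counts.items() if (n - mu) / sigma > threshold)


# Hypothetical sample: four quiet hosts and one host issuing many lookups.
records = []
for host in ("10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"):
    records += [{"src_ip": host, "query": "example.com"}] * 2
records += [{"src_ip": "10.0.0.9", "query": f"h{i}.example.net"} for i in range(19)]
records.append({"src_ip": "10.0.0.9", "query": "c2.bad.example"})

print(ioc_hits(records, {"bad.example"}))
print(noisy_sources(records))
```

Both checks operate on individual fields of the metadata rather than on raw packets, which is what keeps them cheap enough to run continuously on a stream.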

The power and utility of these new forms of advanced network metadata are further amplified when deployed in combination with data science tools and techniques. Wikipedia defines data science as a “concept to unify statistics, data analysis, machine learning and their related methods in order to understand and analyze actual phenomena with data. It employs techniques and theories drawn from many fields within the context of mathematics, statistics, computer science.” Network (IT) operations and security teams are leaning on data science and ML/AI models, in addition to human investigation and analysis, to help make decisions based on the volumes of data they monitor. Additionally, metadata has been identified as having “investigative value.” The Gartner research note “Applying Network-Centric Approaches for Threat Detection and Response,” published on March 18, 2019 (ID: G00373460) by Augusto Barros, Anton Chuvakin and Anna Belak, notes: “Hence, rich metadata and file capture deliver much better investigative value – it is easier and faster to find things – at a much lower computational and storage cost.”

Consequently, network metadata is emerging as the common standard for monitoring and control applications, as the most accurate and reliable way to interact and communicate information pertaining to the current state and behavior of networks.

Network metadata provides deeper, more dynamic and more flexible control of and visibility into network headers and payloads, as well as the sources, destinations, protocols, and characteristics of the traffic flows they are part of. Organizations looking for a better, faster, more efficient and more accurate way to monitor and manage their networks should embrace these advanced network metadata technologies as they look to stay on the leading edge by leveraging data science processes and practices.

Network Metadata Take-Aways:

- Fast: Reduces time-to-detect and respond with real-time in-memory processing

- Complete: Enables visibility across the protocol stack (L2 – L7)

- Flexible: Appliance or SaaS/cloud-based, scaling on demand and running in any environment, on-prem or in the cloud, from the core to the edge. Supports advanced traffic analysis (entropy, RegEx…) and custom protocol interrogation

- Efficient: Target and receive specific protocols, attributes and traffic of interest via efficient binary serialization formats
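The entropy and RegEx analysis mentioned under “Flexible” can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the hex-label pattern and the 3.5-bit threshold are hypothetical choices for the example, not values taken from any product.

```python
import math
import re
from collections import Counter

# Illustrative pattern: a label made up entirely of 16 or more hex characters.
HEX_LABEL = re.compile(r"^[0-9a-f]{16,}$")


def shannon_entropy(text):
    """Shannon entropy of a string, in bits per character."""
    if not text:
        return 0.0
    total = len(text)
    counts = Counter(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_generated(label, min_entropy=3.5):
    """Flag a DNS label that matches the hex pattern or has high character entropy."""
    return bool(HEX_LABEL.match(label)) or shannon_entropy(label) >= min_entropy


for label in ("www", "mail", "deadbeefdeadbeef", "q7x2kz9vb4tw8m1r"):
    print(label, round(shannon_entropy(label), 2), looks_generated(label))
```

Ordinary labels such as `www` score low on both tests, while long random-looking strings tend to score high, which is why entropy is a common first-pass signal for machine-generated names.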